+--------+      +--------+      +--------+
| Public |      |  WAF   |      |  ILB   |
|Internet| <--> |        | <--> | (AKS)  |
+--------+      +--------+      +--------+
    |               |               |
    |               |               |
                +--------+      +--------+
                |        |      |  AKS   |
                |  Hub   |      |Service |
                |        |      +--------+
                +--------+
                | Azure  |
                |Firewall|
                +--------+

In an earlier post we went over some of the “out of the box” issues with AKS clusters provisioned with default settings when building out a typical Hub and Spoke. In this post we will examine some of the configuration you can use when creating your clusters to keep your application data and DNS traffic symmetric and passing through your security appliance.

Concept

We are not going to cover CNI directly in this post. CNIs are already covered in great detail here. The setup below will work with both Azure CNI and Kubenet. I will cover Overlay and Cilium CNI options in another post.

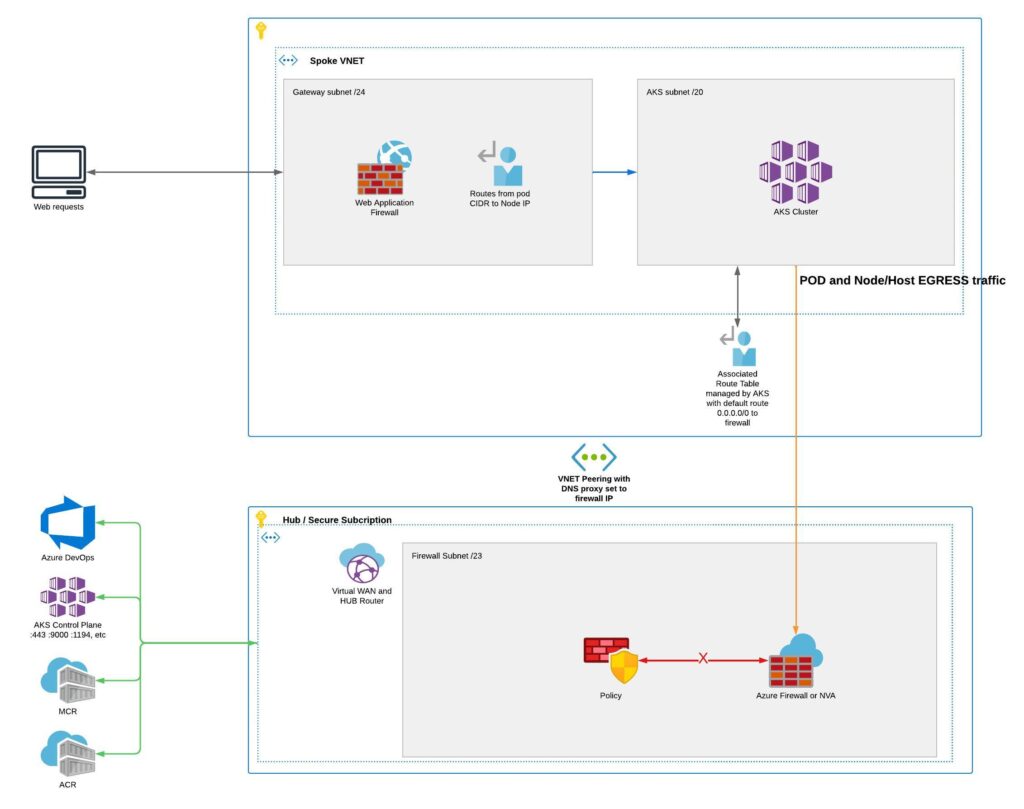

We have our fun ASCII diagram above. Below is a more detailed plan of how we want our traffic to flow:

Outbound Type

Now, let's start with the Terraform we need to provision the cluster with the required network changes. One of the key settings is «outboundType» (ARM), or «outbound_type» in Terraform. By default this is set to «loadBalancer»; we need to change it to «userDefinedRouting», as below:

# Inside the azurerm_kubernetes_cluster resource
network_profile {
  load_balancer_sku = var.lb_sku
  network_plugin    = var.aks_plugin
  network_policy    = var.aks_policy
  service_cidr      = var.aks_service_cidr
  dns_service_ip    = var.aks_dns_service_ip
  # Send egress via the subnet's route table instead of the load balancer
  outbound_type     = "userDefinedRouting"
}

Setting «outbound_type» to «userDefinedRouting» also removes the AKS-managed routing for egress traffic, which would normally exit through the load balancer directly to the internet. This is referred to as «managedOutboundIPs» in Azure Resource Manager (ARM).

User Assigned Identity

Next, you will need to associate a route table with the AKS subnet, or the Azure API will not allow the creation of the cluster. We also need to make sure the identity type is «UserAssigned».

One more step: you will need to assign the cluster's roles manually to the «User Assigned Managed Identity». This gives the AKS identity access to the resources it needs.

resource "azurerm_user_assigned_identity" "aks_identity" {
  name                = var.identity_name
  resource_group_name = var.resource_group_name
  location            = var.location
}

# Inside the azurerm_kubernetes_cluster resource
identity {
  type = "UserAssigned"
  identity_ids = [
    azurerm_user_assigned_identity.aks_identity.id
  ]
}
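The manual role assignment mentioned above might look something like the sketch below. The «Network Contributor» role name and the two scopes (the AKS subnet and the route table) are assumptions, not something the snippets in this post dictate, so check what your own setup requires. The resource labels «aks_subnet» and «aks_route_table» are made up for illustration.

```hcl
# Sketch only: the role name and scopes are assumptions, adjust to your environment.

# Let the cluster identity work with the subnet the nodes live in.
resource "azurerm_role_assignment" "aks_subnet" {
  scope                = var.subnet_id_of_aks
  role_definition_name = "Network Contributor"
  principal_id         = azurerm_user_assigned_identity.aks_identity.principal_id
}

# Let the cluster identity work with the user-defined route table.
resource "azurerm_role_assignment" "aks_route_table" {
  scope                = azurerm_route_table.aks_default_route.id
  role_definition_name = "Network Contributor"
  principal_id         = azurerm_user_assigned_identity.aks_identity.principal_id
}
```

Terraform resolves the references regardless of file order, so it does not matter that the route table is declared further down.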

Route Table

resource "azurerm_route_table" "aks_default_route" {
  name                = "example-routetable"
  location            = var.location
  resource_group_name = var.resource_group_name

  # Default route: send all egress traffic to the firewall/NVA
  route {
    name                   = "default"
    address_prefix         = "0.0.0.0/0"
    next_hop_type          = "VirtualAppliance"
    next_hop_in_ip_address = "10.160.1.150"
  }
}

# Attach the route table to the AKS subnet
resource "azurerm_subnet_route_table_association" "aks" {
  subnet_id      = var.subnet_id_of_aks
  route_table_id = azurerm_route_table.aks_default_route.id
}

These are the basic building blocks of a cluster that can route traffic through Azure Firewall or your NVA. I would recommend a Palo Alto series firewall, which can be installed from the Azure Marketplace. Note that the «next_hop_in_ip_address» argument is the actual IP of the firewall. This should of course sit in a VNET-peered Security/Hub subscription.
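To show how the fragments above fit together, here is a minimal sketch of the cluster resource itself. The resource label «aks», the variables «cluster_name» and «dns_prefix», and the node pool values are placeholders invented for this example; the rest reuses the names from the snippets in this post.

```hcl
# Sketch only: node pool sizing and the cluster_name/dns_prefix variables are placeholders.
resource "azurerm_kubernetes_cluster" "aks" {
  name                = var.cluster_name # hypothetical variable
  location            = var.location
  resource_group_name = var.resource_group_name
  dns_prefix          = var.dns_prefix # hypothetical variable

  default_node_pool {
    name           = "default"
    node_count     = 1                 # placeholder
    vm_size        = "Standard_D2s_v3" # placeholder
    vnet_subnet_id = var.subnet_id_of_aks
  }

  identity {
    type         = "UserAssigned"
    identity_ids = [azurerm_user_assigned_identity.aks_identity.id]
  }

  network_profile {
    load_balancer_sku = var.lb_sku
    network_plugin    = var.aks_plugin
    network_policy    = var.aks_policy
    service_cidr      = var.aks_service_cidr
    dns_service_ip    = var.aks_dns_service_ip
    outbound_type     = "userDefinedRouting"
  }

  # The route table has to be attached to the subnet before the cluster is created.
  depends_on = [azurerm_subnet_route_table_association.aks]
}
```

The explicit «depends_on» is there because the cluster only refers to the subnet through a variable, so Terraform cannot infer on its own that the route table association must come first.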

In addition, you will need to create firewall openings to Azure resources before you start building in Terraform. The firewall requirements can be found here.
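If you are on Azure Firewall with a firewall policy, one possible starting point for those openings is an application rule using the «AzureKubernetesService» FQDN tag. This is a rough sketch, not the full requirements list: the variables «firewall_policy_id» and «aks_subnet_cidr», the names, and the priorities are all assumptions made for this example.

```hcl
# Sketch only: variables, names and priorities are hypothetical.
resource "azurerm_firewall_policy_rule_collection_group" "aks" {
  name               = "aks-egress"
  firewall_policy_id = var.firewall_policy_id # hypothetical variable
  priority           = 500

  application_rule_collection {
    name     = "aks-required-fqdns"
    priority = 500
    action   = "Allow"

    rule {
      name = "aks-fqdn-tag"

      protocols {
        type = "Https"
        port = 443
      }

      protocols {
        type = "Http"
        port = 80
      }

      source_addresses      = [var.aks_subnet_cidr] # hypothetical variable
      destination_fqdn_tags = ["AzureKubernetesService"]
    }
  }
}
```

Anything your workloads need beyond the tag, such as container registries or other external endpoints, would need its own rules.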

DNS Proxy

One last piece of the puzzle concerns the spoke VNET. We want to be able to see the full FQDNs in the DNS requests coming from the pods. This allows you to use application rules if you are using Azure Firewall, greatly increasing visibility of the queries made by the running containers. This is a one-time setup, so it can be done in the portal. We need to change the DNS servers of the VNET to the firewall IP. The example firewall is set to «10.160.1.150», so we have used that below too:

resource "azurerm_virtual_network" "aks-vnet" {
  name                = var.name
  address_space       = [var.ip_address_space]
  resource_group_name = var.resource_group_name
  location            = var.location
  # Point the VNET's DNS at the firewall so it can proxy and log queries
  dns_servers         = ["10.160.1.150"]
}

Remember to enable the DNS proxy on your firewall to get full visibility into the traffic exiting your AKS cluster.
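For Azure Firewall managed through a firewall policy, enabling the DNS proxy could look like the sketch below. It assumes your firewall uses a policy in the first place; the resource label «hub» and the variables «firewall_policy_name» and «hub_resource_group_name» are invented for this example. On a third-party NVA the equivalent setting lives in the vendor's own configuration.

```hcl
# Sketch only: assumes a policy-managed Azure Firewall; names are hypothetical.
resource "azurerm_firewall_policy" "hub" {
  name                = var.firewall_policy_name    # hypothetical variable
  resource_group_name = var.hub_resource_group_name # hypothetical variable
  location            = var.location

  dns {
    # The firewall answers DNS queries from the spoke VNET and forwards them upstream.
    proxy_enabled = true
  }
}
```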

Hope you enjoyed this; there are a lot more AKS articles in the making! If your organisation needs help or support, please feel free to reach out to Sicra AS!